Superegos for the digital world

From Tim Harford’s conclusion to his brilliant “How to make the world add up” :

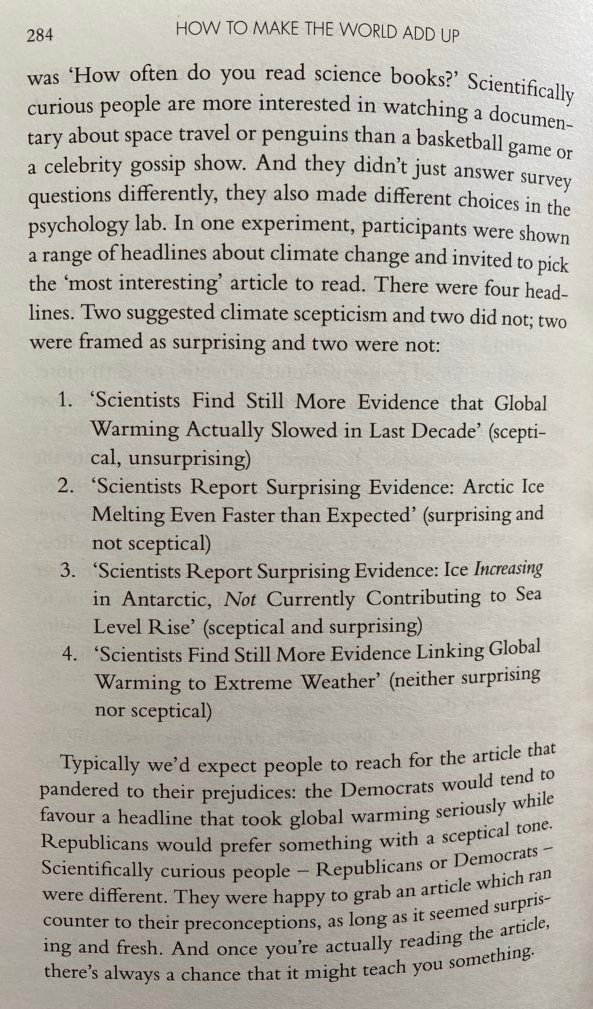

From Tim Harford’s “How to make the world add up” : a study by Dan Kahan showed that scientific literacy actually reinforces biases and tribalism, BUT ‘scientific curiosity’ reduces them! The more curious we are, the less tribalism influences our views, and thankfully there is no correlation between curiosity and political affiliation, so that trait is present across the political spectrum.

The book by Nate Silver “The Signal and the Noise …” is an amazing read. Very well written, entertaining as well as deep, it holds lessons that are applicable in our daily personal and professional lives. Its stated purpose is to look at how predictions are made, and how accurate they are, in several fields : weather, stock market, earthquakes, terrorism, global warming … But beyond that simple premise, it is a real eye opener when it comes to describing some of the deeply flawed ways in which we humans analyze the data we have at hand, and make decisions.

Nate Silver is very skeptical of the promises of Big Data, and believes that the exponential growth in available data in recent years only makes it tougher to separate the grain from the chaff, the signal from the noise. One of the ways he believes we should strive to make better forecasts is to constantly recalibrate them based on new evidence, and to actively test our models to improve our predictions and therefore our decisions. The key to doing that is Bayesian statistics … This is a very compelling, if complex, use of the Bayes Theorem, and it’s detailed through a few examples in the book.

As he explains, in the field of economics, the US government publishes some 45,000 statistics. There are billions of possible hypotheses and theories to investigate, but at the same time “there isn’t any more truth in the world than there was before the internet or the printing press”, so “most of the data is just noise, just as the universe is filled with empty space”.

The Bayes Theorem goes as follows :

P(T|E) = P(E|T)xP(T) / ( P(E|T)xP(T) + P(E|~T)xP(~T) )

Where T is the theory being tested, E the evidence available. P(E|T) means “probability of E being true if we assume that T is true”, and notation ~T stands for “NOT T”, so P(E|~T) means “probability of E being true if we assume that T is NOT true”.
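For readers who prefer code to notation, here is a minimal Python sketch of the formula above. The function name `posterior` and its parameter names are my own choices for illustration, and the numbers in the sanity checks are made up, not taken from the book.

```python
def posterior(prior, p_e_given_t, p_e_given_not_t):
    """Return P(T|E) from P(T), P(E|T) and P(E|~T) using Bayes' theorem."""
    true_positive = p_e_given_t * prior                 # P(E|T) x P(T)
    false_positive = p_e_given_not_t * (1.0 - prior)    # P(E|~T) x P(~T)
    return true_positive / (true_positive + false_positive)


# Sanity checks with made-up numbers (not taken from the book):
# evidence that is equally likely under T and ~T should leave the prior unchanged,
uninformative = posterior(prior=0.3, p_e_given_t=0.5, p_e_given_not_t=0.5)
# while evidence that never shows up when T is false should make T certain.
decisive = posterior(prior=0.3, p_e_given_t=0.5, p_e_given_not_t=0.0)
print(uninformative, decisive)
```

The two checks capture the intuition behind the theorem : evidence only moves our belief to the extent that it is more likely under one hypothesis than under the other.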

A classical application of the theorem is the following problem : for a woman in her forties, what is the chance that she has breast cancer if her mammogram indicates a tumor ? The basic statistics are the following, with their mathematical representation if T is the theory “has a cancer” and E the evidence “has had a mammogram that indicates a tumor” :

– if a woman in her forties has a cancer, the mammogram will detect it in 75% of cases – P(E|T) = 75%

– if a woman in her forties does NOT have a cancer, the mammogram will still erroneously detect a cancer in 10% of cases – P(E|~T) = 10%

– the probability for a woman in her forties to have a cancer is 1.4% – P(T) = 1.4%

With that data, if a woman in her forties has a mammogram that detects a cancer, the chance of her actually having a cancer is … less than 10% !!! That seems totally unrealistic – isn’t there an error rate of only 25% or 10%, depending on how you read the above data ? The twist is that there are many more women without a cancer (98.6%) than women with a cancer at that age (1.4%), so the number of erroneous cancer detections, even if they represent only 10% of the cases where women are healthy, will be very high.

That’s what the Bayes theorem computes – the probability of a woman having a cancer if her mammogram has detected a tumor is :

P(T|E) = 75%x1.4% / ( 75%x1.4% + 10%x98.6% ) = 9.6 %
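One way to make this result feel less surprising is to count actual women instead of juggling percentages. The short Python sketch below does that with the rates quoted above; the cohort size of 100,000 is an arbitrary choice of mine, picked only so that every count comes out as a whole number.

```python
# Natural-frequency version of the mammogram example, using the rates quoted above.
# The cohort size of 100,000 is an arbitrary choice made for illustration.
cohort = 100_000

with_cancer = cohort * 14 // 1000          # 1.4% have a cancer
without_cancer = cohort - with_cancer      # the remaining 98.6%

true_positives = with_cancer * 75 // 100       # detected in 75% of cases
false_positives = without_cancer * 10 // 100   # wrongly flagged in 10% of cases

share = true_positives / (true_positives + false_positives)
print(f"{true_positives} true positives, {false_positives} false positives")
print(f"share of positive mammograms that are real cancers: {share:.1%}")
```

Out of 100,000 women, 1,050 positive mammograms are real cancers while 9,860 are false alarms, which is why fewer than one positive result in ten corresponds to an actual cancer.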

Nate Silver uses that same theorem in another field – we have many more scientific theories being published and tested every day around the world than ever before. How many of these are actually statistically valid ?

Let’s use the Bayes theorem : if E is the experimental demonstration of a theory, and T the fact that the theory is actually valid, and with the following statistics :

– a correct theory is demonstrated in 80% of cases – P(E|T) = 80%

– an incorrect theory will be disproved in 80% of cases – P(E|~T) = 20%

– proportion of theories that are correct – P(T) = 10%

In that case, the probability that a positive experiment means the theory is correct is only about 31% – again a result that goes against our intuition, as it seems from the above statistics that the “accuracy” of proving or disproving theories is 80% !!! The Bayes Theorem does the calculation right, and takes into account the low probability of a new theory being valid in the first place :

P(T|E) = 80%x10% / ( 80%x10% + 20%x90% ) ≈ 31 %

There again, events with rare occurrences (valid theories) tend to generate lots of false positives. In real life this leads to a counter-intuitive fact : at the same time as there is a huge proliferation of published scientific research, it has been found that two-thirds of “demonstrated” results cannot be reproduced !!!
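This also suggests why reproducing a result matters so much. As a rough illustration of the “recalibrate on new evidence” idea, the Python sketch below feeds each posterior back in as the next prior. It relies on an assumption of mine that is not in the book : each replication is independent and has the same 80% / 20% rates as in the example above. The function name `update` is likewise just an illustrative choice.

```python
def update(prior, p_e_given_t=0.80, p_e_given_not_t=0.20):
    """One Bayesian update after a positive experiment (rates from the example above)."""
    numerator = p_e_given_t * prior
    return numerator / (numerator + p_e_given_not_t * (1.0 - prior))


belief = 0.10  # prior: 10% of new theories are correct
history = []
for _ in range(3):
    # Assumption: each replication is independent and has the same 80%/20% rates.
    belief = update(belief)
    history.append(belief)

print([round(b, 2) for b in history])
```

Under those assumptions, one positive experiment leaves us at roughly 31%, a second independent confirmation brings us to 64%, and a third to about 88%. A single “demonstrated” result is weak evidence; repeated ones add up quickly.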

So … this book should IMO be taught in school … It gives very powerful and non-intuitive mental tools to make us better citizens, professionals and individuals. I don’t have much hope of this making its way into the school curriculum any time soon, so don’t hesitate, read this book, and recommend it to your friends and family 🙂

I just finished reading the second book of The Kingkiller Chronicle by Patrick Rothfuss. After a fantastic first book, I was wondering if the author would disappoint or run out of steam. But he continues to weave a fascinating and deep tale.

The story on the surface is a very classic fantasy tale of a young man getting into a magic academy, in a typical medieval fantasy setting. What differentiates it is the depth and detail of that world, which are slowly unveiled, with great consistency. It is like seeing a rich tapestry being woven in front of your eyes. The characters themselves are very deep, and multi-faceted.

Patrick Rothfuss uses classic themes of the genre like magic, fairies, or dragons, but subtly gives them his own twist, and keeps the readers always on their toes, never knowing when what seems familiar will become strange and mysterious.

I am really impressed. The only comparison that comes to mind is The Lord of the Rings ! It is not (yet) a tale as epic as LOTR – probably halfway in that regard between The Hobbit and LOTR – but what it lacks in drama and scale, it more than makes up for with richer and more complex characters, and a very modern read on their motivations and lives. One thing Rothfuss and Tolkien have in common is the place that languages, music and songs occupy in their stories.

I can’t wait to read the third book, which unfortunately will probably only come out in May ’13 !

The author’s theory is that the US has reaped the low-hanging fruit of productivity, and that its future material and financial growth is at risk. He details three key areas that fueled past productivity, but will not drive progress going forward :

– access to free land, from the 17th century to the end of the 19th century

– improvements in education. The percentage of the population graduating from High School grew from 6% in 1900 to 60% in 1960, and 74% today. Only 0.25% of people went to college in 1900, a number which has grown to 40% today. But we seem to have reached the limit of these improvements, as college drop-out rates have grown from 20% in the 60s to 30% now …

– a host of technological breakthroughs from 1880 to 1940. These have slowed down since then, and as the author puts it, there is not much difference between a kitchen (or a house) today and one in the 50s in terms of the basic functionalities that had then become available (fridge, TV …). 80% of the economic growth from 1950 to 1993 actually came from innovations that happened before that time.

The author links that last point with the drastic reduction in the rate of growth of the median income, starting in 1970. His view is that discoveries since then have been geared towards private goods rather than goods for the larger public. The impact of the Internet is much more complex though and there is a whole chapter on that, which I will comment on later.

There is then a whole section looking at how we have tended to overestimate productivity through the GDP calculations :

– government spending is always factored in the GDP at cost, regardless of the utility or value created. This does not take into account the fact that as government grows there will be a diminishing return on that value. Since the 19th century the cost of government (excluding redistributions) has grown from 5% of GDP to 15-20%, which means we have overestimated the GDP growth, and the productivity, derived from that growth in spending.

– there is a similar issue with Healthcare, which is 15% of GDP in the US. Its efficacy is impossible to determine, and there is an established disconnect across modern countries between the spend, and metrics such as average life span.

– same thing with Education, which represents 6% of US GDP. Reading and mathematics scores at the age of 17 have not changed since the early 70s, while the expenditure corrected for inflation has doubled per pupil.

The looming question that the author then tackles is that of the impact of the Internet. To summarise, he says that the Web provides huge innovation for the mind, not for the economy. It makes us happier and enables personal growth, but does not impact the economy very much, as so much of the content is free or very low cost.

So the Internet is also not properly reflected in GDP and productivity metrics, and that is one area where GDP underestimates the positive impact of technological change.

The issues with the Internet revolution, though, are the following :

– we have been counting on real productivity and material economic impact to generate future revenues and pay off our debts …

– the benefits from that revolution are unequally shared. Using the Internet positively is a function of one’s cognitive powers, while past inventions were usable equally by everyone.

– it creates few jobs. Google has 20,000 employees, Facebook 1,700 …

The author then goes into an analysis of the current Economic crisis, which he sees as a result of overconfidence across our society in productivity. I am not convinced that should be the only explanation, but this is at least a refreshing view and a new angle.

Looking forward, despite the gloomy title of his book, Mr Cowen sees some positive future trends :

– growth in India and China will create larger markets that will reward innovation again. They have so far grown by imitating the West; they will probably fuel innovation in the future.

– Internet might start generating growth. It creates a “cognitive surplus” (Clay Shirky) as billions of people are getting smarter and better connected, which would have positive effects on innovation.

– the Obama administration has taken steps to reform education

He concludes with an appeal to raise the status of scientists in our society, to make science and technology aspirational and rewarding careers for our children … Could not agree more !

So before I give up reading it again, let me share the content of the piece I have managed to read through !

Steven Pinker analyses language as a window into the way we think. He starts by asking the profound question ‘How do children learn language ?’, focusing especially on how they know what NOT to say.

For example, one can say :

“I sprayed water onto the roses”

As well as

“I sprayed the roses with water”

We can imagine that hearing such multiple examples allows children to determine that there is a pattern, a rule. It could go like this : ‘if I can say “something actioned object a onto object b”, then I can equally say “something actioned object b with object a” ‘.

But that rule does not always work. For example :

While one can say

“I coiled a rope around the pole”

One cannot say

“I coiled the pole with a rope”

This is a very important issue, more than it first appears. We could try to explain that this is just an exception. But this itself poses another problem. How would children learn these exceptions ? They can determine patterns by listening to adults, but exceptions like the one above would require them to know what is NOT being said. The only way this could happen is for the child to make the mistakes, and get corrected. But this would require massive additional amounts of interactions which don’t seem realistic.

Fortunately there is another explanation offered by Steven Pinker.

We were looking for rules that would involve simple grammatical patterns. Instead it appears that the rule is about a mental picture of the action being performed. This is where the window into our underlying thought processes starts opening.

Our brain seems to classify verbs in categories that correspond to types of actions performed, but with distinctions far from obvious at first glance. For example, brush, plaster, rub, drip, dump, pour, spill all seem to pertain to getting some liquid or goo onto a receptacle. But the fact that you can “smear a wall with paint” while you cannot “pour a glass with water” tells you that you have to classify them in two different types of actions :

– the first type is when you apply force to both the substance and the surface simultaneously : brush, daub, plaster, rub, smear, smudge, spread, swab. You are directly causing the action, with a sense of immediateness.

– the second type is when you allow gravity to do the action : drip, funnel, pour, siphon, spill. You are acting indirectly here, and letting an intermediate enabling force do the work. There is also a sense of the action happening after you have triggered it, instead of immediately.

The first type allows the “smear a wall with paint” construction, the second type does not.

Going further, the book explains how researchers have used non-existent verbs such as “goop”, telling children that it means wiping a cloth with a sponge, to test how children instinctively use these verbs and whether the above rules apply … And they do !

So there IS a logical set of rules that explain how we talk, and these rules provide a glimpse into the mental models we use to conceive of reality, even before we verbalise our thoughts.

I hope I gave you a small taste of that fascinating piece of writing, and even more that you will become as curious as I am about this field of study. Another great book by the same author, “How the Mind Works”, attempts to explain the basic mechanisms we use to think. It is maybe an easier read, but it is equally thought-provoking and fascinating.

What will it be for you ? Read one of these books and let me know !

I have recently finished a good book : “Whoops!” by John Lanchester. It is another in a long series of readings I have done over the past two years since the crisis hit. Like most of them it explains the root causes of the financial and economic crisis, and the lack of a cure so far. It does so probably less accurately but in a more lively and entertaining manner.

One of the most interesting contentions of the author is that with the end of the cold war and the obvious failure of communism, capitalism found itself unchallenged as a paradigm. The most extreme supporters of free unbridled markets found themselves in a position of ideological monopoly, and were able to dismantle the apparatus of controls that had been put together by governments after the crisis of ’29 and the social safety nets built during the cold war at a time when communist countries were claiming to bring security and happiness to their citizens.

It is an interesting theory, but I am not entirely convinced by the logic of the arguments. It certainly fails to recognise the historical events that were the opening up of India and China and their return to the forefront of the international economic scene after two centuries of absence. It also does not mention the impact of an ageing western population, and especially of the baby-boom generation, creating a glut of retirement money chasing yields that a smaller number of younger people could not satisfy – unless, that is, one could find a way to hand out large numbers of real estate loans to folks who could never pay them back …

Still a very interesting read !

P.S. One particular paragraph in the book made me think hard … And not very successfully so far ! I searched the Internet a bit and found the following link which will show you how confusing some statistics can be.

Click here for link

Happy thinking !

Reading a great book : “13 things that don’t make sense” by Michael Brooks. It puts into perspective the news you hear about dark matter and other current scientific pursuits. Bottom line : we know much less than we pretend to, and there is experimental data that we have trouble fitting into our models. This reminds me of scientists saying in the 19th century that everything had been discovered (and of parallels in the human sciences such as “the end of History”). It is great and refreshing to see that we are but in the infancy of understanding the laws governing our world, and there is always going to be room for new Einsteins !